The Full Stack, Part I

One of my most vivid memories from school was the day our chemistry teacher let us in on the Big Secret: every chemical reaction is a joining or separating of links between atoms. Which links form or break is completely governed by the energy involved and the number of electrons each atom has. The principle stuck with me long after I'd forgotten the details. There existed a simple reason for all of the strange rules of chemistry, and that reason lived at a lower level of reality. Maybe other things in the world were like that too. (Originally appeared on Facebook's Engineering blog and perfplanet.com.)

A "full-stack programmer" is a generalist, someone who can create a non-trivial application by themselves. People who develop broad skills also tend to develop a good mental model of how different layers of a system behave. This turns out to be especially valuable for performance & optimization work. No one can know everything about everything, but you should be able to visualize what happens up and down the stack as an application does its thing. An application is shaped by the requirements of its data, and performance is shaped by how quickly hardware can throw data around.

Consider this harmless-looking SQL statement:

INSERT INTO some_table VALUES (1, 2, 3);

What is the maximum number of these you can do per second? Well, it first depends on the storage engine you use. If the table is in MyISAM format, a write may result in one more disk seek or rotation than with InnoDB. InnoDB stores the row data right after the index. With MyISAM, indexes and data are stored in different files. A hard drive can only do one thing at a time, so this detail can make a big difference in performance. It also depends on what mechanisms are in place (write-through caches, journals, etc.) to keep random seeks to a minimum, and whether you are using table-level or row-level locking. Digging deeper into how these storage engines work, you can find ways to trade safety for speed.
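To see why one extra seek matters, here is a rough sketch of the ceiling that seeks put on serialized writes. It assumes a round figure of 10 milliseconds per random seek on a magnetic disk and ignores caching, batching and locking entirely. The function name and the seek counts are illustrative, not measurements of either storage engine.

```python
# Assumption: one random seek on a magnetic disk costs about 10 ms (10^7 ns).
SEEK_NS = 10**7
NS_PER_SECOND = 10**9


def max_writes_per_second(seeks_per_write: int, seek_ns: int = SEEK_NS) -> float:
    """Upper bound on writes/sec when each write pays for its seeks one at a time."""
    return NS_PER_SECOND / (seeks_per_write * seek_ns)


if __name__ == "__main__":
    # Hypothetical seek counts; real engines vary with caches and journals.
    for seeks in (1, 2, 3):
        rate = max_writes_per_second(seeks)
        print(f"{seeks} seek(s) per write: at most ~{rate:.0f} writes/sec")
```

Going from one seek per write to two halves the ceiling, which is why storage layout details show up so directly in write throughput.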

The shape of the data

One way to visualize a system is how its data is shaped and how it flows. Here are some useful factors to think about:

- Working data size: This is the amount of data a system has to deal with during normal operation. Often it is identical to the total data size minus things like old logs, backups, inactive accounts, etc. In time-based applications such as email or a news feed the working set can be much smaller than the total set. People rarely access messages more than a few weeks old.

- Average request size: How much data does one user transaction have to send over the network? How much data does the system have to touch in order to serve that request? A site with 1 million small pictures will behave differently from a site with 1,000 huge files, even if they have the same data size and number of users. Downloading a photo and running a web search involve similar-sized answers, but the amounts of data touched are very different.

- Request rate: How many transactions are expected per user per minute? How many concurrent users are there at peak (your busiest period)? In a search engine you may have 5 to 10 queries per user session. An online ebook reader might see constant but low volumes of traffic. A game may require multiple transactions per second per user.

- Mutation rate: This is a measure of how often data is added, deleted, and edited. A webmail system has a high add rate, a lower deletion rate, and an almost-zero edit rate. An auction system has ridiculously high rates for all three.

- Consistency: How quickly does a mutation have to spread through the system? For a keyword advertising bid, a few minutes might be acceptable. Trading systems have to reconcile in milliseconds. A comments system is generally expected to show new comments within a second or two.

- Locality: This has to do with the probability that a user will read item B if they read item A. Or to put it another way, what portion of the working set does one user session need access to? On one extreme you have search engines. A user might want to query bits from anywhere in the data set. In an email application, the user is guaranteed to only access their inbox. Knowing that a user session is restricted to a well-defined subset of the data allows you to shard it: users from India can be directed to servers in India.

- Computation: What kinds of math do you need to run on the data before it goes out? Can it be precomputed and cached? Are you doing intersections of large arrays? The classic flight search problem requires lots of computation over lots of data. A blog does not.

- Latency: How quickly are transactions supposed to return success or failure? Users seem to be ok with a flight search or a credit card transaction taking their time. A web search has to return within a few hundred milliseconds. A widget or API that outside systems depend on should return in 100 milliseconds or less. More important is to maintain application latency within a narrow band. It is worse to answer 90% of queries in 0.1 seconds and the rest in 2 seconds than to answer all requests in 0.2 seconds.

- Contention: What are the fundamental bottlenecks? A pizza shop's fundamental bottleneck is the size of its oven. An application that serves random numbers will be limited by how many random-number generators it can employ. An application with strict consistency requirements and a high mutation rate might be limited by lock contention. Needless to say, the more parallelizability and the less contention, the better.

This model can be applied to a system as a whole or to a particular feature like a search page or home page. It's rare that all of the factors stand out for a particular application; usually it's 2 or 3. A good example is ReCAPTCHA. It generates a random pair of images, presents them to the user, and verifies whether the user spelled the words in the images correctly. The working set of data is small enough to fit in RAM, there is minimal computation, a low mutation rate, low per-user request rate, great locality, but very strict latency requirements. I'm told that ReCAPTCHA's request latency (minus network latency) is less than a millisecond.
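The latency item in the list above compares a two-speed system with a steady one. A small sketch makes the comparison concrete. The helper names are made up for illustration, and a latency "mix" here is just a list of (fraction of requests, seconds) pairs.

```python
def mean_latency(mix):
    """Average latency of a mix given as (fraction_of_requests, seconds) pairs."""
    total = sum(fraction for fraction, _ in mix)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return sum(fraction * seconds for fraction, seconds in mix)


def percentile_latency(mix, p):
    """Smallest latency within which at least fraction p of requests finish."""
    covered = 0.0
    for fraction, seconds in sorted(mix, key=lambda item: item[1]):
        covered += fraction
        if covered >= p - 1e-12:
            return seconds
    return max(seconds for _, seconds in mix)


# The two systems from the latency item: 90% fast and 10% slow, versus steady.
bimodal = [(0.9, 0.1), (0.1, 2.0)]
steady = [(1.0, 0.2)]

if __name__ == "__main__":
    for name, mix in (("bimodal", bimodal), ("steady", steady)):
        print(name, "mean:", round(mean_latency(mix), 3),
              "p95:", percentile_latency(mix, 0.95))
```

The two-speed system loses on both counts: its average is higher, and one request in ten takes ten times as long as anything the steady system ever returns.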

A horribly oversimplified model of computation

How an application is implemented depends on how real computers handle data. A computer really does only two things: read data and write data. Now that CPU cycles are so fast and cheap, performance is a function of how fast it can read or write, and how much data it must move around to accomplish a given task. For historical reasons we draw a line at operations over data on the CPU or in memory and call that "CPU time". Operations that deal with storage or network are lumped under "I/O wait". This is terrible because it doesn't distinguish between a CPU that's doing a lot of work, and a CPU that's waiting for data to be fetched into its cache.[0] A modern server works with five kinds of input/output, each one slower but with more capacity than the last:

- Registers & CPU cache (1 nanosecond): These are small, expensive and very fast memory slots. Memory controllers try mightily to keep this space populated with the data the CPU needs. A cache miss means a 100X speed penalty. Even with a 95% hit rate, CPU cache misses waste more than 80% of the time: the 5% of accesses that miss cost about 5 nanoseconds per access on average, against roughly 1 nanosecond for the hits.

- Main memory (10^2 nanoseconds): If your computer was an office, RAM would be the desk scattered with manuals and scraps of paper. The kernel is there, reserving Papal land-grant-sized chunks of memory for its own mysterious purposes. So are the programs that are either running or waiting to run, network packets you are receiving, data the kernel thinks it's going to need, and (if you want your program to run fast) your working set. RAM is hundreds of times slower than a register but still orders of magnitude faster than anything else. That's why server people go to such lengths to jam more and more RAM in.

- Solid-state drive (10^5 nanoseconds): SSDs can greatly improve the performance of systems with working sets too large to fit into main memory. Being "only" one thousand times slower than RAM, solid-state devices can be used as ersatz memory. It will take a few more years for SSDs to replace magnetic disks. And then we'll have to rewrite software tuned for the RAM / magnetic gap and not for the new reality.

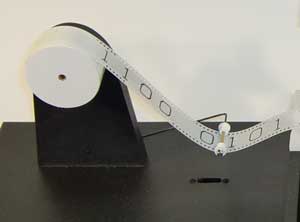

- Magnetic disk (10^7 nanoseconds): Magnetic storage can handle large, contiguous streams of data very well. Random disk access is what kills performance. The latency gap between RAM and magnetic disks is so great that it's hard to overstate its importance. It's like the difference between having a dollar in your wallet and having your mom send you a dollar in the mail. The other important fact is that access time varies wildly. You can get at any part of RAM or SSD in about the same time, but a hard disk has a physical metal arm that swings around to reach the right part of the magnetic platter.

- Network (10^6 to 10^9 nanoseconds): Other computers. Unless you control that computer too, and it's less than a hundred feet away, network calls should be a last resort.
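The round numbers in this list are enough to work out how much a given miss rate hurts. The sketch below uses the powers of ten from the list and treats a miss as a trip to the next, slower tier. The function names are hypothetical, and the hit rates you plug in are yours to supply.

```python
# Approximate access times from the list above, in nanoseconds.
LATENCY_NS = {
    "cpu cache": 1,
    "main memory": 10**2,
    "ssd": 10**5,
    "magnetic disk": 10**7,
}


def average_access_ns(hit_rate, hit_ns, miss_ns):
    """Expected cost of one access when misses fall through to a slower tier."""
    return hit_rate * hit_ns + (1 - hit_rate) * miss_ns


def fraction_spent_on_misses(hit_rate, hit_ns, miss_ns):
    """Share of total access time that goes to servicing misses."""
    miss_time = (1 - hit_rate) * miss_ns
    return miss_time / average_access_ns(hit_rate, hit_ns, miss_ns)


if __name__ == "__main__":
    cache, ram = LATENCY_NS["cpu cache"], LATENCY_NS["main memory"]
    for hit_rate in (0.90, 0.95, 0.99):
        share = fraction_spent_on_misses(hit_rate, cache, ram)
        print(f"hit rate {hit_rate:.0%}: {share:.0%} of access time spent on misses")
```

The same two functions work for any adjacent pair of tiers, for example a RAM-resident working set that occasionally spills to disk.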

Trust, but verify

The software stack your application runs on is well aware of the memory/disk speed gap, and does its best to juggle things around such that the most-used data stays in RAM. Unfortunately, different layers of the stack can disagree about how best to do that, and often fight each other pointlessly. My advice is to trust the kernel and keep things simple. If you must trust something else, trust the database and tell the kernel to get out of the way.

Thumbs and envelopes

I'm using approximate powers-of-ten here to make the mental arithmetic easier. The actual numbers are less neat. When dealing with very large or very small numbers it's important to get the number of zeros right quickly, and only then sweat the details. Precise, unwieldy numbers usually don't help in the early stages of analysis. [1]

Suppose you have ten million (10^7) users, each with 10MB (10^7 bytes) of data, and your network uplink can handle 100 megabits (10^7 bytes) per second. How long will it take to copy that data to another location over the internet? Hmm, that would be 10^7 seconds, or about 4 months: not great, but close to reasonable. You could use compression and multiple uplinks to bring the transfer time down to, say, a week. If the approximate answer had been not 4 but 400 months, you'd quickly drop the copy-over-the-internet idea and look for another answer.
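The same estimate as a few lines of arithmetic, using only the figures in the paragraph above. It also runs the less tidy version, with 100 megabits converted to bytes exactly, to show how little the rounding changes the conclusion.

```python
SECONDS_PER_DAY = 86_400


def transfer_seconds(users, bytes_per_user, uplink_bytes_per_second):
    """Time to push every user's data through a single uplink."""
    return users * bytes_per_user / uplink_bytes_per_second


# Powers-of-ten version from the text.
rough = transfer_seconds(10**7, 10**7, 10**7)

# Less neat version: 100 megabits/sec is 12.5 million bytes/sec.
exact = transfer_seconds(10**7, 10**7, 100_000_000 / 8)

if __name__ == "__main__":
    print(f"rough: {rough:.0e} seconds, about {rough / SECONDS_PER_DAY:.0f} days")
    print(f"exact: {exact:.0e} seconds, about {exact / SECONDS_PER_DAY:.0f} days")
```

Both answers land in the "a few months" bucket, which is all the early analysis needs.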

movies.example.com

So can we use this model to identify the performance gotchas of an application? Let's say we want to build a movies-on-demand service like Netflix or Hulu. Videos are professionally produced and between 20 and 200 minutes long. You want to support a library of 100,000 (10^5) films and 10^5 concurrent users. For simplicity's sake we'll consider only the actual watching of movies and disregard browsing the website, video encoding, user comments & ratings, logs analysis, etc.

- Working data size: The average video is 40 minutes long, and the bitrate is 300kbps. 40 * 60 * 300,000 / 8 is about 10^8 bytes. Times 10^5 videos means that your total working set is 10^13 bytes, or 10TB.

- Average request size: A video stream session will transfer somewhere between 10^7 and 10^9 bytes. In Part One we won't be discussing networking issues, but if we were this would be cause for alarm.

- Request rate: Fairly low, though the concurrent requests will be high. Users should have short bursts of browsing and long periods of streaming.

- Mutation rate: Nearly nil.

- Consistency: Unimportant except for user data. It would be nice to keep track of what place they were in a movie and zip back to that, but that can be handled lazily (e.g. in a client-side cookie).

- Locality: Any user can view any movie. You will have the opposite problem of many users accessing the same movie.

- Computation: If you do it right, computation should be minimal. DRM or on-the-fly encoding might eat up cycles.

- Latency: This is an interesting one. The worst case is channel surfing. In real-world movie services you may have noticed that switching streams or skipping around within one video takes a second or two in the average case. That's at the edge of user acceptability.

- Contention: How many CPU threads do you need to serve 100,000 video streams? How much data can one server push out? Why do real-world services seem to have this large skipping delay? When multiple highly successful implementations seem to have the same limitation, that's a strong sign of a fundamental bottleneck.
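The size figures in the list above can be reproduced from the stated bitrate and running times. This is a sketch with made-up helper names. It assumes a constant 300kbps for every video, as the text does.

```python
import math

BITRATE_BPS = 300_000  # 300kbps, from the working data size item
LIBRARY_SIZE = 10**5   # number of films


def video_bytes(minutes, bitrate_bps=BITRATE_BPS):
    """Bytes needed to store or stream a video of the given length."""
    return minutes * 60 * bitrate_bps / 8


def nearest_power_of_ten(value):
    """Exponent of the closest power of ten, for envelope math."""
    return round(math.log10(value))


average_video = video_bytes(40)
working_set = average_video * LIBRARY_SIZE
shortest_session = video_bytes(20)
longest_session = video_bytes(200)

if __name__ == "__main__":
    print(f"average video: {average_video:.1e} bytes, "
          f"about 10^{nearest_power_of_ten(average_video)}")
    print(f"working set:   {working_set:.1e} bytes, "
          f"about 10^{nearest_power_of_ten(working_set)}")
    print(f"one session:   {shortest_session:.1e} to {longest_session:.1e} bytes")
```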

It's possible to build a single server that holds 10TB of data, but what about throughput? A hundred thousand streams at 300kbps (10^5 * 3 * 10^5) is 30 gigabits per second (3 * 10^10 bits). Let's say that one server can push out 500Mbps in the happy case. You'll need at least 60 servers to support 30Gbps. That implies about 2,000 concurrent streams per server, which sounds almost reasonable. These guesses may be off by a factor of 2 or 4 but we're in the ballpark.
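Here is the throughput estimate as code, so the guesses can be varied. The 500 megabits per second per server is the text's happy-case assumption, not a benchmark, and the function name is illustrative.

```python
import math

STREAMS = 10**5
STREAM_BPS = 300_000          # 300kbps per stream
SERVER_BPS = 500_000_000      # assumed happy-case output of one server


def servers_needed(streams, stream_bps, server_bps):
    """Minimum number of servers whose combined output covers all streams."""
    total_bps = streams * stream_bps
    return math.ceil(total_bps / server_bps)


if __name__ == "__main__":
    # Try the guess itself, then a guess that is off by 2x in either direction.
    for server_bps in (SERVER_BPS // 2, SERVER_BPS, SERVER_BPS * 2):
        count = servers_needed(STREAMS, STREAM_BPS, server_bps)
        per_server = STREAMS / count
        print(f"{server_bps / 1e6:.0f} Mbps per server: {count} servers, "
              f"~{per_server:.0f} streams each")
```

With the 500Mbps guess the division gives roughly 1,700 streams per server, which the text rounds up to 2,000. Halving or doubling the per-server figure moves the fleet between 120 and 30 machines, so the answer stays in the tens to low hundreds either way.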

You could store a copy of the entire 10TB library on each server, but that's kind of expensive. You probably want either:

- A set of origin servers and a set of streaming servers. The origins are loaded with disks. The streamers are loaded with RAM. When a request comes in for a video, the streamer first checks to see if it has a local cache. If not, it contacts the origins and reads it from there.

- A system where each video is copied to only a few servers and requests are routed to them. This might have problems with unbalanced traffic.

An unknown factor is the distribution of popularity of your video data. If everyone watches the same 2GB video, you could just load the whole file into the RAM of each video server. On the other extreme, if 100,000 users each view 100,000 different videos, you'd need a lot of independent spindles or SSDs to keep up with the concurrent reads. In practice, your traffic will probably follow some kind of power-law distribution in which the most popular video has X users, the second-most has 0.5X users, the third-most 0.33X users, and so on. On one hand that's good; the bulk of your throughput will be served hot from RAM. On the other hand that's bad, because the rest of the requests will be served from cold storage. A useful hack might be to keep the first minute of every video in RAM at all times.
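To get a feel for how much a hot cache buys you, the sketch below assumes popularity follows the X, 0.5X, 0.33X pattern exactly, meaning the video at rank r gets a share proportional to 1/r. Real traffic will not be this tidy. It also sizes the "first minute of every video" hack at the 300kbps bitrate used earlier. All function names are hypothetical.

```python
def harmonic(n):
    """Sum of 1/rank for ranks 1..n: total demand under 1/rank popularity."""
    return sum(1.0 / rank for rank in range(1, n + 1))


def share_of_top(top_n, library_size):
    """Fraction of all viewers watching one of the top_n most popular videos."""
    return harmonic(top_n) / harmonic(library_size)


def first_minute_cache_bytes(videos, bitrate_bps, seconds=60):
    """RAM needed to pin the opening seconds of every video."""
    return seconds * bitrate_bps // 8 * videos


if __name__ == "__main__":
    library = 10**5
    for top_n in (100, 1_000, 10_000):
        print(f"top {top_n:>6} videos: {share_of_top(top_n, library):.0%} of viewers")
    cache = first_minute_cache_bytes(library, 300_000)
    print(f"first minute of every video: {cache / 1e9:.0f} GB of RAM in total")
```

Under this assumption a cache holding 1% of the library serves a bit over 60% of viewers, and the remaining viewers are spread thinly across everything else, which is the cold-storage problem the paragraph describes.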

Whatever architecture you use, it looks as though the performance of movies.example.com will depend almost completely on the number of concurrent reads your storage devices can handle. If I were building this today I would give both SSDs and non-standard data prefetching strategies a serious look.

It's been fun

This subject is way too large for a short writeup to do it justice. But absurd simplifications can be useful as long as you have an understanding of the big picture: an application's requirements are shaped by the data, and implementations are shaped by the hardware's ability to move data. Underneath every simple abstraction is a world of details and cleverness. The purpose of the big fuzzy picture is to point you where to start digging.

Notes

[0] Fortunately there is a newish tool for Linux called "perf counters".

[1] Jeff Dean of Google deserves a lot of credit for popularizing the "numbers you should know" approach to performance and systems work. As my colleague Keith Adams put it, "The ability to quickly discard bad solutions, without actually building them, is a lot of what good systems programming is. Some of that is instinct, some experience, but a lot of it is algebra."