Predicting the Future

Lately I've been using a definition of the future that seems to kick up interesting ideas. It's not original, but it is useful:

The future is a disagreement with the past about what is and is not important.

The differences between two ages are informed by politics, technology, demographics, etc. But they are easiest to understand in terms of what each age thinks is worth caring about.

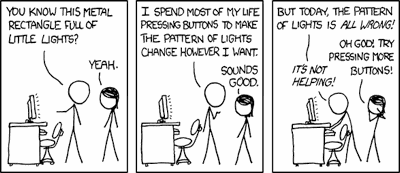

Knowing who your parents are is less important today than it was a couple hundred years ago. Not knowing doesn't cripple you socially as it once did. Knowing what material your water pipes are made of became suddenly important when we noticed the crippling effects of lead. Today we pay close attention to lab tests and labels and indicator lights that summarize what's really going on, because a lot of important stuff is too small or complex to comprehend directly. If I had to pick a major disagreement between the present world and the past, it would be the importance of invisible amounts of mass and energy, be they trace chemicals or transistors. Moreover, we tend to care about emergent information content, the patterns in the material, rather than the actual material.

To a typical Victorian that wouldn't be heresy so much as fantastic nonsense. Your great-grandparents' world was populated by people, animals, and human-scale artifacts. Man was more literally the measure of all things. Important things were assumed to be big and obvious, or at least visible to the senses. Germ theory was one of the first ideas to break that assumption in a serious way. It didn't help that its companion idea, vaccination, was even weirder.

The point is that if you want to do truly "futuristic" work, you can't just extrapolate from what is believed right now. You have to suppose that at least one aspect of our worldview is wrong: something we hold dear is not actually important, or there is something else that should be, or both. If you imagine people feeling and acting exactly the same way as they do now, that's not the future. That's just later on. Also remember that the future is not an ever-upwards spiral. Who would have predicted in 1930 that mass slavery would return to Europe?

The flip side is that these discontinuities make predicting the future hard. The clues to how we may think are camouflaged among thickets of established fact. You have to isolate assumptions underneath our view of the world, alter them, then look around to see what changed. The deeper and more unspoken the assumption, the greater the potential for change. On the surface it sounds like a pretty stupid way to spend your time: disassembling ideas that aren't broken in order to discover ways to break them. On the other hand, that's precisely what tinkerers do with machines: break them in order to understand and improve on them. That is, I think, what is meant by the motto "the best way to predict the future is to invent it". The initial spadework is the same whether you are predicting or inventing.

You needn't court-martial everything you think you know, but you do need ways to identify suspicious areas, and tools to dig deeper. So what might they be? There is one that I was mighty proud of inventing, until I described it to a Literature major.

Popular culture as a lens

One way to find these unspoken assumptions is to examine the treatment of a futuristic subject in popular culture, pick out the common themes, and ask whether they make sense. Popular culture is pretty good for this kind of practice, though what you get out of it may not be earth-shattering. A fiction writer's stock-in-trade is ideas that feel right but are not necessarily backed up by evidence.

For example, science fiction overflows with stories about artificial beings. What do they have in common? Well, a common trope is that artificial intelligences are sufficiently human de facto, especially if the story's conflict is about their status de jure. It's rare to find a story about AI which concludes that they are utterly and forever alien. Wintermute employed synthetic emissaries, and spoke casually about its motivations and desires. Agent Smith is explicitly Neo's mirror image. HAL 9000's voice was as warm and soothing as a late-night radio host's. The golems of Jewish folklore are an interesting case. They are humanoid but explicitly not intelligent. Their moral position is somewhere between djinns and power tools. Terry Pratchett explored the idea of intelligent golems in "Feet of Clay", and it turned out exactly as you would expect: after many misunderstandings they assert their rights and join the mainstream of society. It's hard to shake the feeling that AI stories are mostly allegories for racial integration.

We seem to have a hard time coming to grips with intelligence that does not have a face or a voice. When we say "intelligent" in casual speech, we mostly mean "a bloke I can have a conversation with". It may turn out that artificial intelligences are not able to evoke empathy from or experience empathy for natural-born humans. I'm not sure I like the idea of what happens when we compete with such beings for resources.

The phrase "artificial intelligence" itself may harbor simplistic assumptions, like calling an X-ray machine a "magic lantern". It's not a bad analogy, but it misses important things like how X-rays are generated, other species of exotic radiation, and how too much of it will kill you. Imagine a passive fabric of knowledge that, when and only when directed by a human, accomplishes superhuman feats. The conflict would not be over the humanity of the entity in question, but over which humans control it.

So, an unoriginal though interesting prediction: the idea of artificial intelligence having a distinct personality with recognizable motivations and desires is attractive, but there is little evidence that it must happen.

Subtextual subversion

Fans of hard science like to deride Derrida for being pseudo-intellectual, but this literary method is more or less what he was talking about. My wife was very pleased to point that out when I tried to pass it off as my own invention. Deconstructionism got lost in the weeds because its practitioners don't use reality to verify their theories, but the basic method seems sound. Can you use it on other bodies of literature, not just fiction?

Over the last ten years, the field of data-mining shifted its focus to gathering enough of the right data instead of just devising ever more clever algorithms. I remember reading a lot of papers on automatic text classification in the late 1990s. They drew from a small pool of datasets, such as a collection of news articles. Innovation happened in the algorithms. This was a reasonable idea. Data was hard to come by, and using the same datasets seemed like a good way to compare the performance of different algorithms. The underlying assumption was carried over from other fields of computer science: given a representative sample of data, the way forward is to come up with more sophisticated algorithms.

Researchers at Google were the first I know of to demonstrate value in the opposite: dumb algorithms executed over gigantic datasets. They came to this opinion because they had so much damned data that it was hard enough to count it, much less run O(n³) algorithms over it. So they tried dumb algorithms first, and they worked surprisingly well. Older, naive algorithms turned out to be perfectly valid; they just needed orders of magnitude more input than had been previously tried. I would not be surprised to learn that several people thought of this idea early on. I don't know enough about the field to say.

It's possible you would have hit on the same idea, if you'd analyzed the literature for unspoken assumptions. Or, like Google, you could have played with big problems and new technology while under the gun to produce something useful.

Adopt early and often

Rubbing up against the new is another way to glimpse the future. Just as a child is immersed in a culture and then later derives the rules which shape it, early adopters immerse themselves in new ideas and new technology in order to puzzle out the shape of the future.

Some people are natural early adopters. A friend of mine is busy building an electronic library and giving away his physical books. In eight months he burned through two Kindles and an iPad, and I added a lot of his books to my shelves. The funny thing is that both of us genuinely feel we're getting a good deal: he is divesting his burden of dead trees and space, and I am saving perfectly good books from futuristic folly.

Ours is a classic future/past disagreement. He thinks it's better to move to this new new thing and see how it works. Eventually books will be published on-demand and kept up-to-date just as websites are. Paper will be an option, and not the most popular one. I think that paper is actually a pretty good medium for archival storage. Individuals should act in concert to preserve as much as we can, as more and more of our culture becomes digital-only. I don't know which of us will be right, or both, or neither.

My friend's way to predict the future is to surround himself with new technologies. The new often embodies upcoming disagreements with the present. You still have to do the work of isolating the assumptions it breaks, and deciding whether they are correct. For whatever reason I don't have the temperament for this. My method is to surround myself with early adopters, and watch what they do.

Bring it home

Picture yourself as you were ten years ago. List five things that are different about you now. I'll bet money that most of them are differences in your attitude towards the world. Now picture yourself ten years from today. You probably imagine external qualities: you will be more successful, or relaxed, or in Alaska. But most likely the biggest differences will be internal, what Future You thinks is truly important. It's hard to predict exactly what would change. If you knew that, you'd already be on your way to becoming the Future You.