How To Save The Web
25 August 2008

As strange as it may sound, public record does not exist on the internet. Consider this: it would be impossible for, say, the New York Times to change something it printed in 1997, because there are hardcopies all over the world. But for nytimes.com it's as simple as a mouse click. So the internet we have today is public, but it's not really a record. Healthy public record is the foundation of a free and literate society. I propose that a loosely-connected network of independent archives, running on personal computers, under the care of self-interested individuals, and sharing common data formats, can in time self-assemble into something that fits the bill.

How we got here

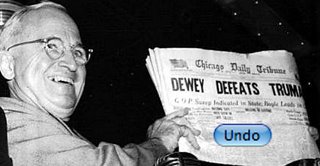

Wouldn't people notice if things went away? Most often they don't, and if they do notice, so what? Revisionism and neglect are low-risk. "Orphan" works are sometimes rescued by their fans, but it's easy to forget that these cases are the exception. Intelligent, influential people say things like this: "Once the Internet knows something, it never forgets. This material just doesn't disappear from the Internet if it's sufficiently interesting." [2]

There are serious problems with this idea. The Rosetta Stone didn't survive thousands of years in the desert because of some intrinsic cultural value. It survived because it's made of stone. The UNIX crowd learned this lesson a few years ago, to their lasting regret: there are no digital copies of the first four versions of UNIX, only some printouts. [3]

Worse is the implication that if something is not "sufficiently interesting", if it's not part of the story a society wants to tell about itself, it's worthless. The future disagrees about what is and is not important, and why. That's the defining characteristic of the future. No one today cares what the Rosetta Stone actually says [4], yet it is more important to us (as the key to hieroglyphics) than it was to the society that made it.

The current situation

The current situation in archives is much like the web: uncoordinated, conflicting, changing. The most widespread problem is a paradoxical attitude: most people understand that a centralized web would be unsustainable, but few seem to carry that logic over to archiving.

There are a few public archives. Many national libraries have set up consortia to study the problem. There are search engine caches like Google's. There is the remarkable and far-sighted archive.org. All of them are welcome (in this game, the more the merrier), but I believe they have various flaws. Google's cache exists for Google's purposes, and is not designed for the long term [5].
The LOCKSS project [6] is commendable in spirit and clever in design, but access to it appears to be limited to select universities and libraries. Archive.org has two handicaps. First, it's not actually possible for one organization to curate the web. Second, as a non-profit and a sitting target, it is forced to take down some of what it does save [7] [8].

In April 2004 the public editor of The New York Times spelled out the paper's position in an article titled "Paper of Record? No Way, No Reason, No Thanks". He was speaking more against the obligation to print government notices than against the idea of public record per se. But all through it he assumes that (a) someone else will do it, and (b) this information (not to mention copies of his newspaper) will always somehow be available to future historians. At the same time, his newspaper was telling archive.org to remove nytimes.com from the collection [9].

What the Archive should be

In short, we need something akin to the web itself: something that can grow without limit, yet does not require much centralized organization. It can be pulled into pieces, operate independently, and merge back together. Once it reaches a certain size it, or at least the idea, will be impossible to kill.

In a sense the archive already exists, though in a low-energy state. Part of it lives in the browser cache of everyone's computer. These caches are not coherent, organized, searchable, or public. They also hold a lot of material that is better left private. We have to work around that. But it's a start.

Dowser's approach

"The lost cannot be recovered; but let us save what remains: not by vaults and locks which fence them from the public eye and use, in consigning them to the waste of time, but by such a multiplication of copies, as shall place them beyond the reach of accident."

Dowser is a program designed to run on a normal personal computer but operate exactly like a website. Its user interface is a fully functional website that is hosted by, and only accessible to, the local machine [10]. This website enables the user to add pages to their local archive, search many search engines at once (aka "metasearch"), search the local archive, add organizational tags, take notes, export and share, etc. It is not intended as a professional tool for research specialists, but as a helpmeet for "power users": journalists, students, writers, etc., who have a demonstrated need for powerful research tools but do not have the time or inclination to train on an academic-grade tool.

When a user adds a URL to the archive, Dowser will attempt to retrieve the content of this URL by itself, without reference to any private authentication or "cookies" held by the user. This helps to ensure that private data stays private. Optionally, Dowser will download linked images, videos and such, and any linked pages up to a user-defined "link depth".
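The retrieval step above can be sketched in Python. This is a minimal illustration under stated assumptions, not Dowser's actual code: `crawl`, `fetch_anonymously` and `LinkExtractor` are hypothetical names, only linked pages (not images or video) are followed, and "link depth" is taken to mean the number of hops away from the starting page. Requests go through a fresh `urllib` opener with no cookie handler, so none of the user's sessions are sent.

```python
# Hypothetical sketch of depth-limited, cookie-free retrieval; not Dowser's code.
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Deque, Dict, List, Set, Tuple
from urllib.parse import urldefrag, urljoin
from urllib.request import Request, build_opener


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_anonymously(url: str) -> str:
    # A fresh opener has no HTTPCookieProcessor, so no cookie jar is consulted
    # and none of the user's logins or sessions are sent with the request.
    opener = build_opener()
    request = Request(url, headers={"User-Agent": "archive-sketch/0.1"})
    with opener.open(request, timeout=30) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        body = response.read()
    try:
        return body.decode(charset, errors="replace")
    except LookupError:  # unknown charset label: fall back to UTF-8
        return body.decode("utf-8", errors="replace")


def crawl(start_url: str, link_depth: int,
          fetch: Callable[[str], str] = fetch_anonymously) -> Dict[str, str]:
    """Fetch start_url, then linked pages up to link_depth hops away (breadth-first)."""
    archive: Dict[str, str] = {}
    queue: Deque[Tuple[str, int]] = deque([(start_url, 0)])
    seen: Set[str] = {start_url}
    while queue:
        url, depth = queue.popleft()
        try:
            html = fetch(url)
        except OSError:  # urllib's URLError/HTTPError and timeouts subclass OSError
            continue     # skip unreachable pages but keep crawling the rest
        archive[url] = html
        if depth >= link_depth:
            continue     # keep this page, but don't follow its links any deeper
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                queue.append((absolute, depth + 1))
    return archive
```

Calling `crawl("https://example.com/", link_depth=1)` would save the page plus every page it links to directly. Passing `fetch` in as a parameter keeps the traversal separate from the network, which also makes the logic easy to exercise offline.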
As time goes on, Dowser will occasionally "ping" the URLs it knows about to determine whether they have changed. If so, it will download the new copy and thus build up a change history. The program will not allow the user to alter any data in his archive, though it is possible to delete anything. Since the formats are open and simple, a determined user can of course alter the archive data with other tools. We can't stop that, and should not try, but it will be an interesting obstacle.

Pulling it together

So far we have only a local archive. What User 0 reads is saved and available only to User 0. So how do we share it worldwide without inviting spam, violating privacy, running afoul of the law, etc.?

The seven qualities above are somewhat in conflict with each other. Privacy may conflict with the need to verify copies; decentralization conflicts with system performance, which in turn conflicts with public access; and so on. Centralized archives solve this by doing it all themselves and building up a reputation for trustworthiness. LOCKSS, which is a network of caching servers installed in many universities, depends on the mutual trust of those institutions for verification, and limits public access to ward off lawsuits by copyright owners.

You and I aren't smart enough to solve the sharing problem. We should start small, listen to the users, and draw on the much greater imagination of the group. Make tools to allow User 0 to share with User 1, then User 2, etc., but make them orthogonal to the rest. Allow people to extend Dowser and use it in ways we don't expect.

The common feature of all of the current archiving schemes is that they are hard for a mere mortal to set up. In theory anyone can download archive.org's software and use it to build their own archive. In practice it's very tricky, and for most people not worth the effort of learning ancillary skills like programming and systems administration. You can't join LOCKSS without being a major university.
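The change tracking described under Dowser's approach (re-fetching known URLs, keeping each distinct version, allowing deletion but not editing) might look something like the following Python sketch. The names `History`, `Snapshot` and `ping_all` are hypothetical, the history is held in memory rather than in the open on-disk formats the essay calls for, and comparing SHA-256 digests is an assumed way of deciding whether a page has changed.

```python
# Hypothetical sketch of an append-only change history; not Dowser's actual format.
import hashlib
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Snapshot:
    fetched_at: float  # Unix time at which this version was stored
    sha256: str        # hex digest of content, used to detect changes
    content: bytes


@dataclass
class History:
    """Per-URL list of versions: new ones can be added and whole entries deleted,
    but this interface offers no way to edit a stored version."""
    _versions: Dict[str, List[Snapshot]] = field(default_factory=dict)

    def urls(self) -> List[str]:
        return list(self._versions)

    def record(self, url: str, content: bytes, now: Optional[float] = None) -> bool:
        """Append content as a new version unless it matches the latest one.
        Returns True if a new version was stored."""
        digest = hashlib.sha256(content).hexdigest()
        versions = self._versions.setdefault(url, [])
        if versions and versions[-1].sha256 == digest:
            return False  # unchanged since the last fetch: nothing new to keep
        stamp = time.time() if now is None else now
        versions.append(Snapshot(stamp, digest, content))
        return True

    def versions(self, url: str) -> Tuple[Snapshot, ...]:
        # A tuple of frozen Snapshots, so callers can read but not rewrite history.
        return tuple(self._versions.get(url, ()))

    def delete(self, url: str) -> None:
        # Deleting is allowed; altering is not.
        self._versions.pop(url, None)


def ping_all(history: History, fetch: Callable[[str], bytes]) -> List[str]:
    """Re-fetch every known URL and return the ones whose content changed."""
    changed = []
    for url in history.urls():
        try:
            content = fetch(url)
        except OSError:
            continue  # unreachable for now: keep the old copies, retry next time
        if history.record(url, content):
            changed.append(url)
    return changed
```

Comparing digests means an unchanged page costs one hash rather than a second stored copy. Byte-level comparison does treat any difference, even a rotating ad or a timestamp, as a new version, so a practical tool would probably need a looser notion of "changed".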
Archiving today is a luxury good: complex, balky, expensive, like cars before 1908. When something is a luxury and you want more of it, what do you do? You turn it into a commodity. Make the hard parts easy, do what you can for the harder parts, and get something workable into the hands of a much larger group. They will take it from there.

Notes


carlos@bueno.org
